Abstract

Generating a set of plausible outputs for a single input is essential for handling the inherent ambiguity of visual tasks. A typical case is medical imaging, where several experts provide different semantic segmentation annotations for the same image. Existing methods model this ambiguity probabilistically in representation space and rely on extensive multi-annotation data to learn that probabilistic space.

However, these methods often falter when only a limited amount of ambiguously labelled data is available, which is common in real-world applications. To address this, we propose a novel framework, termed P²SAM, that leverages the prior knowledge of the Segment Anything Model (SAM) when segmenting ambiguous objects. Specifically, we examine an inherent drawback of SAM in deterministic segmentation, namely the sensitivity of its output to prompts, and turn it into an advantage for ambiguous segmentation by introducing a prior probabilistic space for prompts.

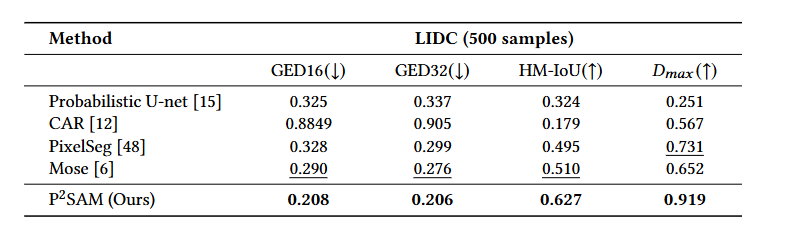

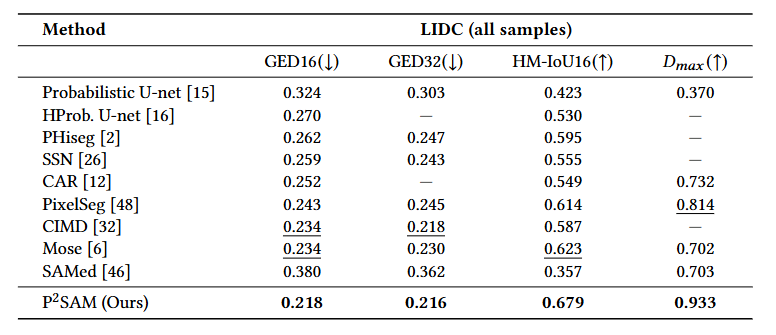

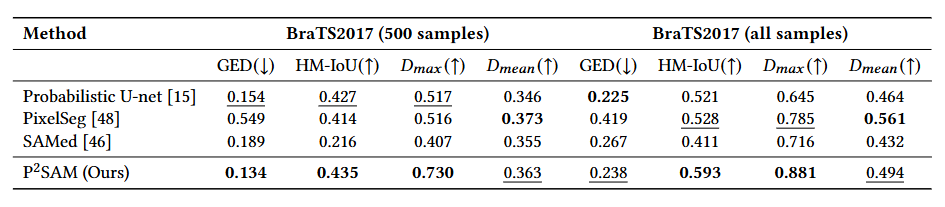

Experimental results demonstrate that our strategy significantly improves the precision and diversity of medical segmentation using only a small number of samples ambiguously annotated by doctors. Benchmarking against state-of-the-art methods shows that our method achieves higher segmentation precision and more diverse outputs with less training data (+12% Dmax using only 5.5% of the samples).

P²SAM represents a substantial step towards the practical deployment of probabilistic models in real-world scenarios with limited data.

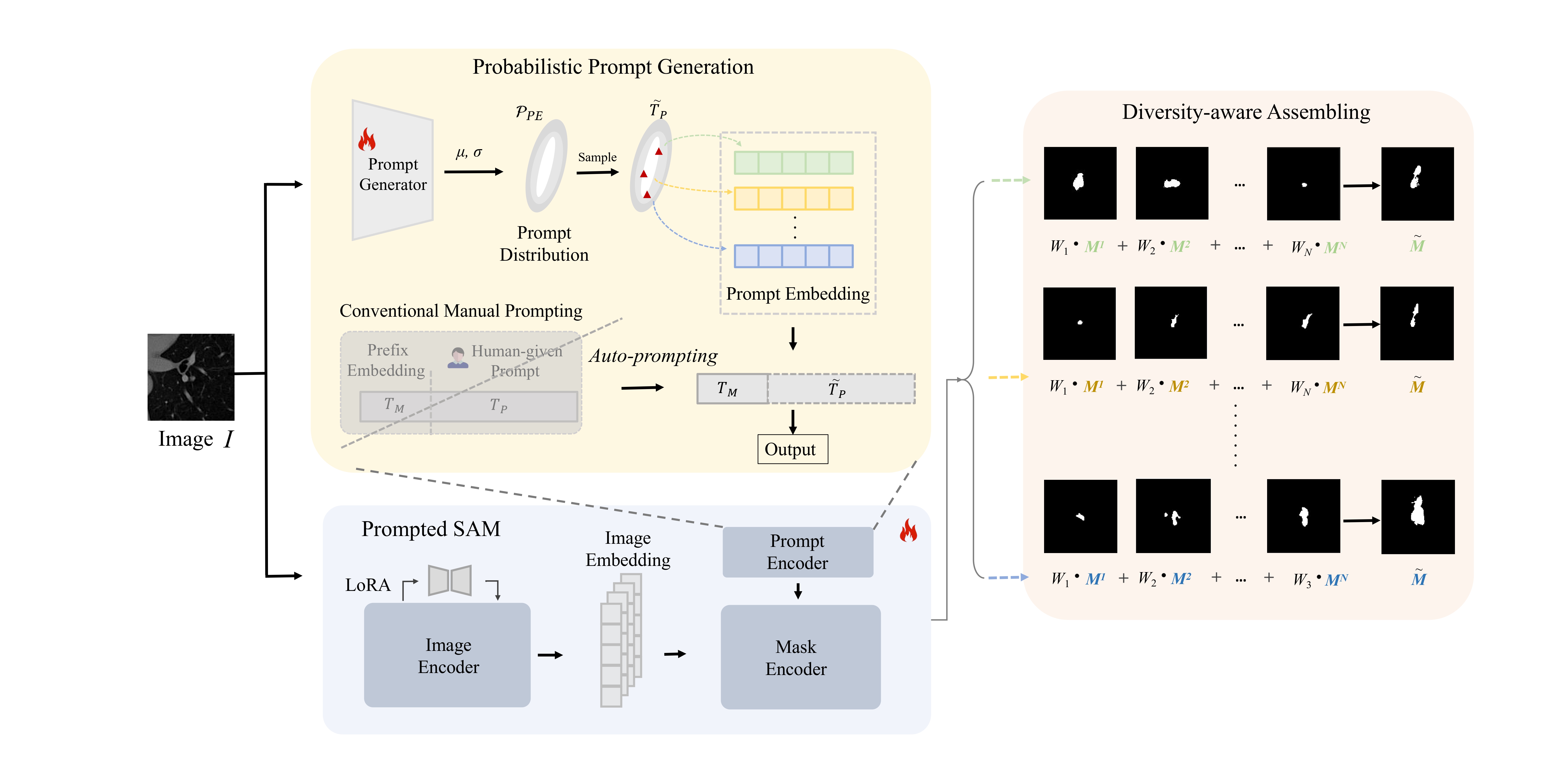

Pipeline

Overview of P²SAM: (a) We first lift conventional SAM prompting to a probabilistic space by using a network that generates a prompt distribution. (b) We then sample a prompt embedding from this probabilistic latent space and feed it into SAM, unlocking SAM's capacity for "one-to-many" ambiguous segmentation. (c) We design a diversity-aware assembling module that perceives the inherent diversity in SAM's outputs and turns it into an ensembled ambiguous output.
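The three stages can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the Gaussian parameterisation (`mu`, `log_var`), the `toy_mask_decoder` stand-in for SAM, the random projections `proj`, and the softmax-weighted fusion in `assemble` are all hypothetical choices made only to show the data flow of sampling a prompt, decoding several candidate masks, and fusing them.

```python
import numpy as np


def sample_prompt_embeddings(mu, log_var, num_samples, rng):
    """(a)+(b): draw prompt embeddings z = mu + sigma * eps from a Gaussian.

    In a real system mu and log_var would be predicted from the image by a
    prompt-generation network; here they are passed in directly (assumption).
    """
    sigma = np.exp(0.5 * log_var)
    eps = rng.standard_normal((num_samples,) + mu.shape)
    return mu + sigma * eps


def toy_mask_decoder(image_feat, prompt, proj):
    """Hypothetical stand-in for a SAM-style decoder.

    Returns K candidate mask logits of shape (K, H, W) for one prompt,
    mimicking the fact that SAM can emit several masks per prompt.
    """
    directions = proj @ prompt  # (K, D)
    return np.einsum("hwd,kd->khw", image_feat, directions)


def assemble(candidates, weight_logits):
    """(c): fuse K candidate logits with softmax weights, then apply a sigmoid."""
    w = np.exp(weight_logits - weight_logits.max())
    w = w / w.sum()
    fused = np.tensordot(w, candidates, axes=1)  # (H, W)
    return 1.0 / (1.0 + np.exp(-fused))


def segment_samples(image_feat, mu, log_var, proj, weight_logits, num_samples, rng):
    """Produce num_samples soft masks for one image, one per sampled prompt."""
    prompts = sample_prompt_embeddings(mu, log_var, num_samples, rng)
    return np.stack(
        [assemble(toy_mask_decoder(image_feat, z, proj), weight_logits) for z in prompts]
    )


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    H, W, D, K = 8, 8, 16, 3
    image_feat = rng.standard_normal((H, W, D))       # placeholder image features
    mu, log_var = rng.standard_normal(D), np.full(D, -1.0)
    proj = rng.standard_normal((K, D, D)) / np.sqrt(D)
    masks = segment_samples(image_feat, mu, log_var, proj, np.zeros(K), 4, rng)
    print(masks.shape)
```

Each sampled prompt yields a different fused mask, which is the "one-to-many" behaviour the overview describes; in practice the fusion weights would be learned rather than fixed.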

Experiments

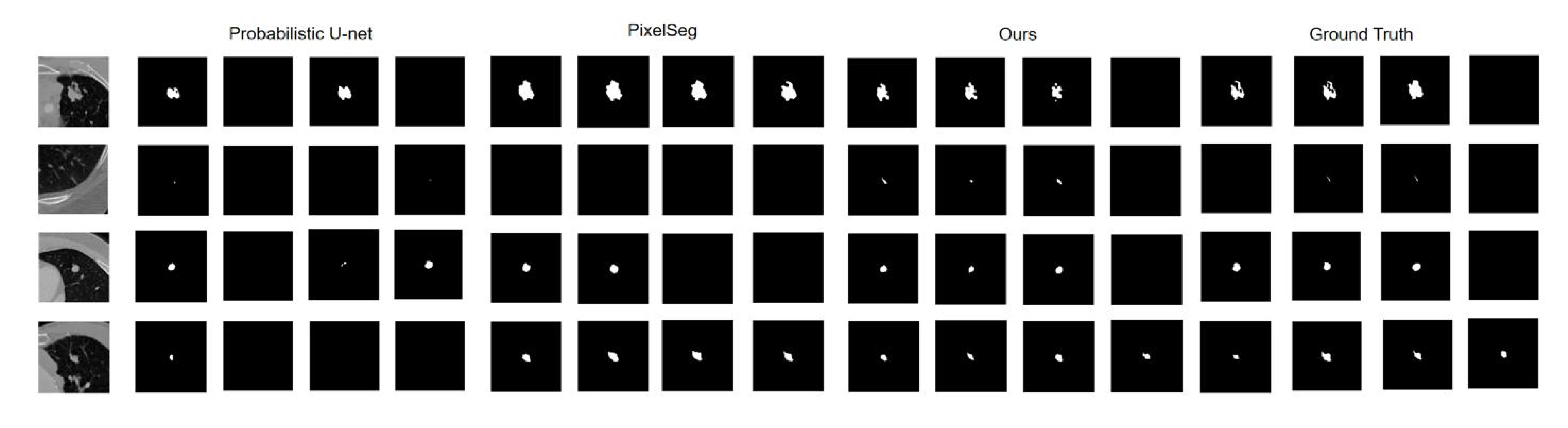

Results on LIDC-IDRI

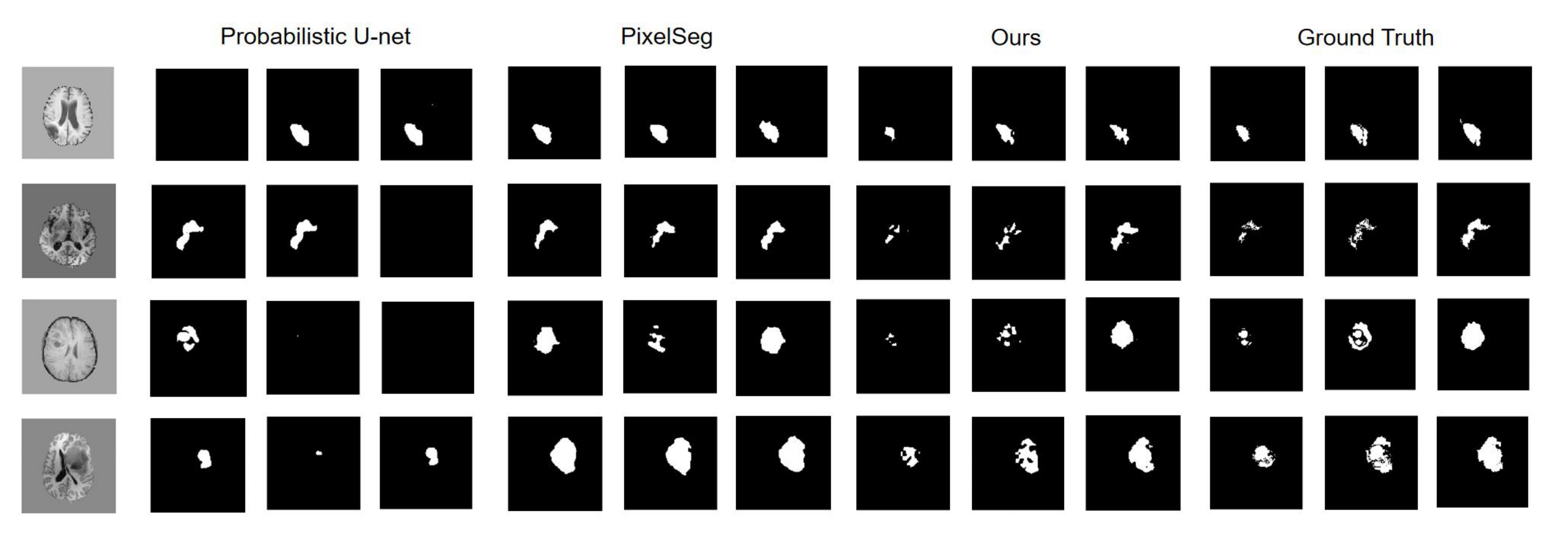

Results on BraTS2017

BibTeX

@article{huang2024p2sam,

title={P²SAM: Probabilistically Prompted SAMs Are Efficient Segmentator for Ambiguous Medical Images},

author={Huang, Yuzhi and Li, Chenxin and Lin, Zixu and Liu, Hengyu and Xu, Haote and Liu, Yifan and Huang, Yue and Ding, Xinghao and Yuan, Yixuan},

journal={arXiv preprint arXiv:2407.01301},

year={2024}

}